Against AI in images and writing

Creativity, humanity, and authenticity are essential for every one of us, and for our collective functioning as a society.

Generative AI is a transformative technology. As long as those big machines keep running, the way we do things will be extremely different from the way it was before this tech existed.

AI makes some tasks more efficient and easier. Along with that ease, we are witnessing a corruption of the historical record and a dilution of our own humanity.

We’ve all seen various kinds of AI-generated horrors, so please humor me as I share the AI-generated image that nudged me over the brink.

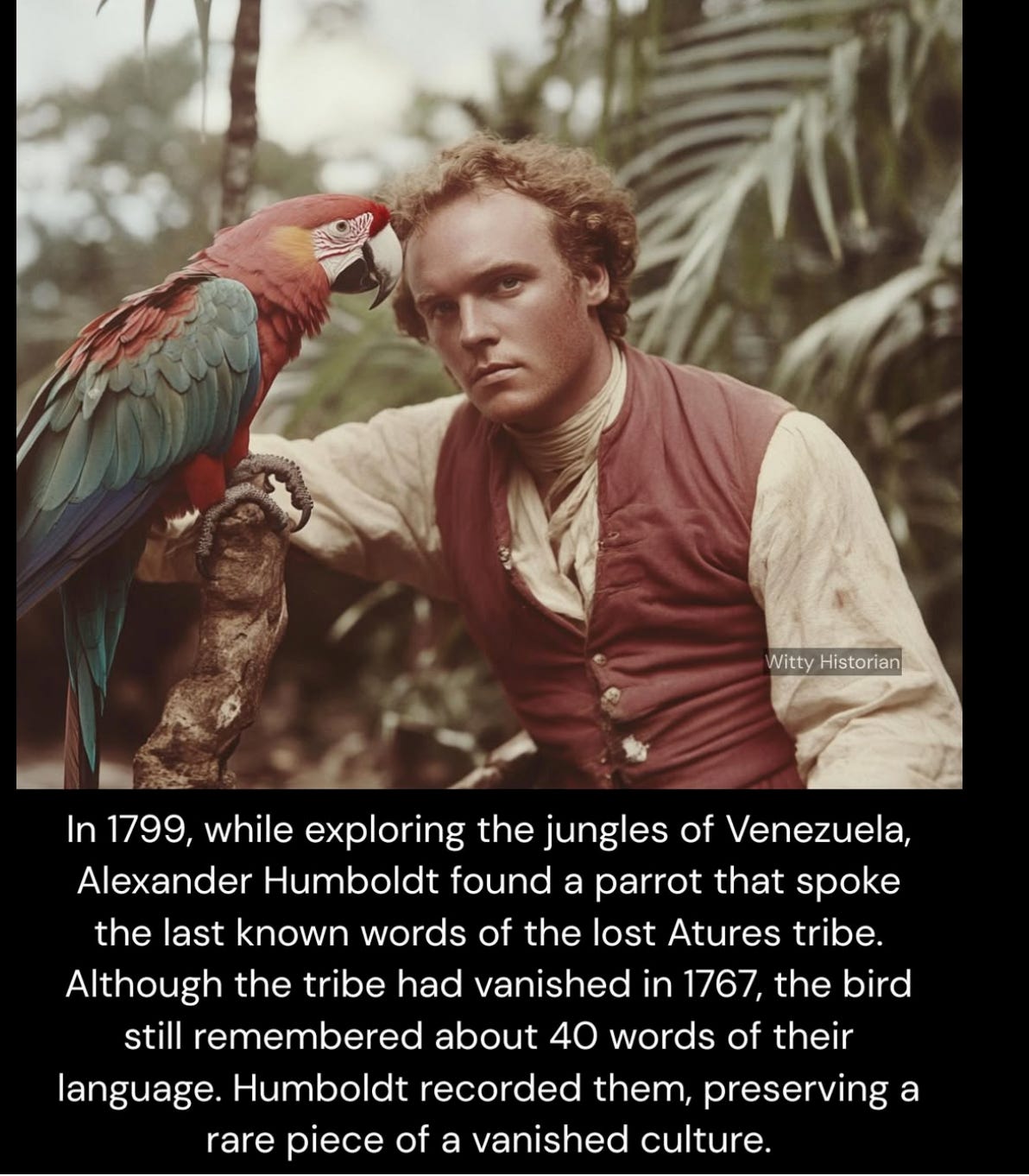

I was checking in on Facebook and there was a post from a ‘witty historian’ account that Meta’s algorithm thought I should see. It’s at the intersection of history, natural history, and tropical biology, so in that sense, the algo knows me. (To be clear, I’m also getting consistent ads for bras made for people in the itty bitty titty committee, so the algo isn’t batting 1.000.) The post had some text about Alexander von Humboldt, a seminal figure in the fields of biodiversity, biogeography, climatology, and more, and it was accompanied by the image above and its caption.

Take a look at this image. Read the caption, and look at the year. It is clear to most of us, I think, that this is not a photograph of Humboldt. But it sure looks like it could be. The only reason we know for an actual fact that it’s not a photograph of him is that photographic technology like this did not exist in 1799. So either this is a photograph of another guy from a later period, or it’s an image generated by AI from a detailed prompt. I imagine it’s the latter, and that anybody with even a passing interest in the forensics of photography could find hundreds of reasons why this is not an actual photo from that era. (The biggies, I think, are that no image that old could have that kind of illumination, or be so crisp and in focus with such a narrow depth of field.) I declare this post to be fake AI trash.

But so what, right? Right now everything on social media is filled with AI slop, so why should we be expecting anything else?

That is the problem. When we encounter information, the default expectation is now that at least some elements of it are invented. We can’t rely on anything being fully factual anymore. How many children know when photographic techniques were developed? The idea of a “post-truth” era was in vogue when Trump and other Republicans could lie without any fact-checking from major media outlets, but it’s clear that now we really are in a time of post-truth. Photographs in the paper may not be real photographs, news reports may not be written by people and may contain egregious factual errors, and they may even be attributed to authors who never existed. These untrue things are presented as if they were true, with no labeling to distinguish falsity from reality.

Historians will now have to draw a clear line in the early 2020s. Before that line, one can assume that a piece of writing was written by a person, and that the choices made in its composition were made by people. If something in it is wrong, it’s because the person was wrong or chose to deceive. After this line, there’s no guarantee whatsoever that a human being had a hand in crafting the word choice, the ideas, or any of it. On some websites and in some publications, we don’t even have any assurance that an editor chose to include the information. It all could be made up. Because of generative AI, source documents for historians now have a post-truth line. You can’t even trust photos or videos, much less written accounts.

We’ve always had people who believe lies and give in to magical thinking. In the Reagan era, for example, lots of folks believed in the fairy tale of trickle-down economics, bought into lies about gay people and AIDS, and even let the President get away with lies about arms trafficking and overthrowing foreign governments. Even though these lies were very popular, the factual record at the time was very clear about them. What’s different now is that generative AI fabrications are so widespread that telling lies is no longer considered problematic.

The power of authoritarian regimes has always involved using disinformation to inure the public to untruths. Now that our media environment has done this for everybody, it empowers authoritarian efforts. I’m not saying that generative AI is responsible for the rise of our current fascist swing in the United States and elsewhere, but it sure is making things easier for the bad guys. Generative AI lets malefactors produce false information backed by fabricated supporting material, on a scale far beyond the kinds of lies that Colin Powell presented to the UN in the fabricated pretext for the war in Iraq.

We’ve always had folks pushing alternative realities, such as conspiracy theorists, people who believe in woo-woo medical hokum, and liars like Newt Gingrich and Rush Limbaugh who regularly fed deceptions to the public. However, back then it was straightforward to fact-check these folks because there were objective facts. Now our newspapers can’t even be bothered to fact-check the lies spread by our leaders.

Because there is so much false information in the media environment, supported by generative AI slop, we have collectively lost the expectation that the information we see will be true. Generative AI turbocharges the ability to develop alternative realities.

While I think generative AI is a scourge because it can be used to generate false information, intentionally and otherwise, I think it’s just as dangerous because generative AI is a substitute for human creation, which is a dilution of our humanity.

There is a difference between using AI as a tool and using AI as a means of creation. I’m okay with machine learning and AI being used to get various kinds of stuff done. But I’m not okay with using AI to create something that is designed to look like the craft of a human being.

For example, when I look at LinkedIn (yes, ugh, I know), I see a ton of posts by corporate dittoheads selling their own variety of workplace philosophy hokum, accompanied by an obviously AI-generated image. It could be a photorealistic rendering of people doing something, a piece of art that looks hand-drawn-ish, or a cartoon or schematic. They created this image to accompany whatever they wrote (if they wrote it; who knows nowadays?), because posts with images get more eyeballs¹. I think that using an AI-generated piece of “art” to accompany writing devalues the writing itself. If the author or editor thinks that a machine can create the image to illustrate the point, it leads me to suspect that they also think the machine can create the primary content itself.

The use of generative AI in writing and art recasts human creation as “content.” I don’t want to read content that wasn’t made by people. If I’m looking at a piece of art, I want every bit of its creation to be the collective product of decisions made by a human being. If I read a piece of writing, I want it to be a compilation of thoughts that all came from decisions made by a human being. And no, writing a detailed prompt that tells generative AI what to put in an image is not the creative work I’m talking about.

If you want a hand-drawn image of a wombat, then draw it yourself. If you can’t draw it yourself, then you could still draw it yourself. Really, you can. You might not like it, but that’s what creation is about. If you want a higher-quality hand-drawn image than you are capable of creating, then enlist the services of a person who is capable of it. By having generative AI create this image for you, you’re intentionally accepting art that is a melded composite of work done by others, work that has been illegally used to train a robot. If you go through life seeing art, essays, science, and literature as “content” rather than as expressions of our human existence, are you really living, or are you just processing content like a robot?

When we replace creative work with the content of generative AI, we are shitting on creators and denigrating the humanness of creativity.

Yes, it’s possible for generative AI to create a piece of art that we might not be able to distinguish from one that was created by people. You might ask, then, what’s wrong with doing it with AI if the product is functionally the same? My response is, do you have a computer make love for you, do you have a computer read books and watch movies for you, do you have a computer play frisbee with your children for you?

I’m not categorically against ML and AI. I think there are huge applications for AI when it comes to automated processes. I’ve been on the receiving end of messy datasets, and I’ve used AI to handle rote cleaning processes that would have taken me days of clicking or coding. I also use AI to proofread the code I use to run statistics, because it’s a lot more adept than I am at finding missing commas and other syntax errors. Heck, I’ve even used AI to generate R code. I make sure that I understand it before I run it, but writing it myself would take me days, and AI can do it in moments. I understand that this is me bypassing the creative process of writing code to do my stats. In this case, I think of the code as a tool to get the machine to run the statistical tests of my choice, which I don’t think robs me of my humanity. If I asked the AI to develop an analysis and interpret the results for me, that would clearly be past the line I’ve drawn for myself. I have also used AI to identify typos and other copyediting errors in my writing. I think that’s fine, even if ChatGPT is not so good at it and flags many of my stylistic choices as errors².

Another useful application, in conservation biology, is finding when and where rare animals are living by having AI sort through hundreds of thousands of camera trap images, work that would take humans many hours. People can make the decisions about what constitutes a sighting, but to find the images that may contain a sighting, I think the pattern recognition of AI can help. Likewise, when I’m learning how to identify birds, I’m glad to be able to use the AI built into Merlin to recognize the songs around me, and to look at a photograph and tell me which species I might have in front of me. If my goal is to identify the bird, then this is a useful tool.

But if I’m out birdwatching, to enjoy nature and to enjoy my day as a human being crossing through this earth? Then I’m not going to be anchored to Merlin. I’ll be listening to the birds, watching the birds, talking about the birds, thinking about the birds. I might ask Merlin to help me figure one out, but the AI is not birdwatching for me. If I want data on birds for a research question and AI can help, that’s great. If I’m watching birds for the sake of watching birds, then AI might be a tool. But if AI became central to the experience, I would find myself living an unexamined and incomplete life.

Ultimately, I think the argument against AI in creative human endeavors is essentially the same argument we’ve been making against plagiarism for many decades. Copying your text from someone else’s writing is having other people do the thinking on your behalf, just as having AI create for you offloads the creative human experience to the machine. If you think of your schoolwork or essay as a compendium of other work, and don’t regard it as the development of information from your own mind, then I suppose plagiarism is fine, if you see it as “content.” But if we are writing to share ideas, then plagiarism is letting other people do the thinking for you, just like AI is letting the robot think for you.

Generative AI simply turbocharges our capacity to outsource our creativity and to forgo the process of being human.

You’ll notice that every single post I have on this site has an image to go with it, and maybe you’ve noticed that I’ve been sure to never use AI (though I might go with a licensed stock image of something real, photographed by a human being).

I suspect that one piece of evidence that this is my own work is that it’s poorly copyedited. I wrote it in a couple of hours, gave it a breezy read, and then clicked ‘publish.’ The only way I can manage to write regularly for this newsletter without it taking up my entire working life is to just do it. I think there are tradeoffs among spontaneity, productivity, and the quality of ideas. If I sat on every post until I thought it was perfect, I’d barely publish anything here!

Every human artist and musician I know, myself included, won't stop making physical, tangible art. My photos on Substack are taken by me, and my drawings are by hand. Graphics, unless otherwise referenced, are made with actual data from physical samples I collected, georeferenced in time and space. Real art, real science.

Thanks for this thoughtful post, Terry. Preserving artistic and scientific integrity is important!

I tell my students: when you take an exam or write a paper, remember that your written (by hand) words are, and should be, yours. Own them.

The silver lining of AI content, IMHO, is that it will hopefully encourage people to question the veracity of everything they read, hear, and see. A healthy dose of critical thinking and scepticism is always a good thing. "Truth" is always hard to nail down, and it's wise never to forget it. I'm suspicious of too easily trusting something because it's authoritative. The dark edge to the silver lining is that sometimes it means people give up and don't believe anything.